3 reasons why schools shouldn't use an "AI Detector"

TL;DR: Using AI detectors to catch student cheating is flawed. Their opaque scores offer little usable evidence, and they frequently mislabel human writing as AI-generated or miss cheaters entirely. Analyzing how a text was composed, such as time spent and revision history, provides more robust detection and gives educators the insights they need for meaningful discussions with students about potential AI use.

The Problem

With ChatGPT, getting 90th percentile answers to exam or homework questions has become as easy as clicking a button. Surveys suggest that up to 89% of students already use it for their schoolwork. AI may be appropriate in some classes, but it should not be allowed in all of them. Here we discuss 3 reasons why relying on an AI originality score alone is misleading.

Reason 1. High False Positives

False Positives = Labeling honest students as cheaters

A false positive occurs when a model wrongly attributes a human-written text to AI. Instructors find this scenario particularly troubling because many consider labeling an honest student a cheater to be worse than failing to catch a cheater.

Some current AI detection methods report a false positive rate of at least 4%. At that rate, a class of 25 honest students can expect about one student to be wrongly labeled as a cheater on average, and the chance that at least one of them is flagged is roughly 64% (assuming each essay is judged independently). The consequences can be severe: a false accusation undermines the reputation of the institution and the instructor, and may lead to legal action by the affected students.
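To make the 4% figure concrete, here is a quick back-of-the-envelope calculation. It is only a sketch under two simplifying assumptions that are ours, not the detector vendors': every student in the class wrote their work honestly, and each essay is flagged independently of the others. The function names are made up for illustration.

```python
def expected_false_flags(class_size: int, false_positive_rate: float) -> float:
    """Average number of honest students who get wrongly flagged."""
    return class_size * false_positive_rate


def prob_at_least_one_false_flag(class_size: int, false_positive_rate: float) -> float:
    """Chance that one or more honest students get wrongly flagged.

    Assumes each essay is flagged independently (a simplification).
    """
    return 1.0 - (1.0 - false_positive_rate) ** class_size


# Compare a single class, a large course, and a whole year group.
for size in (25, 100, 500):
    expected = expected_false_flags(size, 0.04)
    chance = prob_at_least_one_false_flag(size, 0.04)
    print(f"{size} students: {expected:.1f} expected false flags, "
          f"{chance:.0%} chance of at least one")
```

For a class of 25 this gives one expected false flag and about a 64% chance that at least one honest student is accused. Both numbers grow quickly as the same detector is applied across more classes and assignments.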

Reason 2. No actionable insights

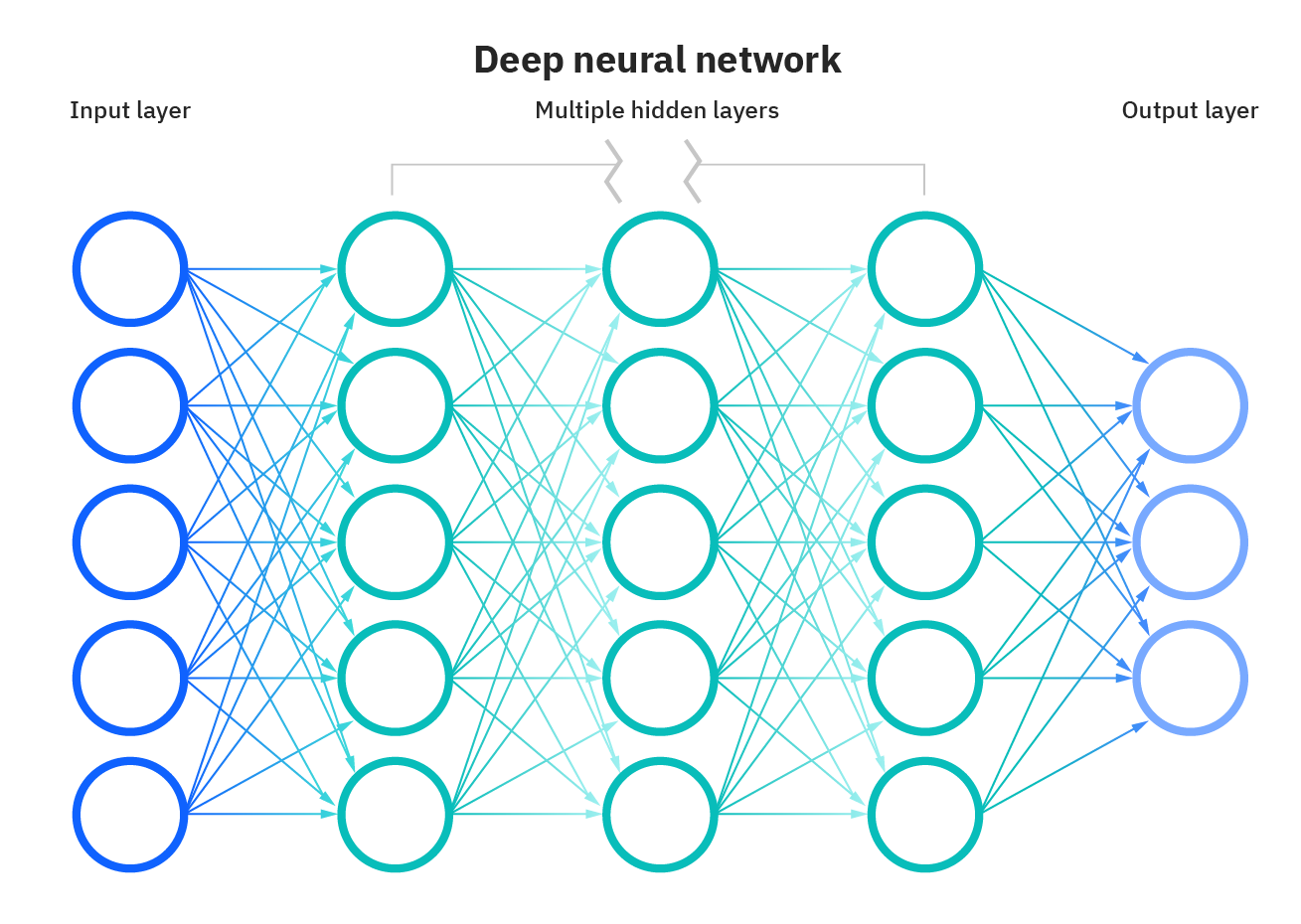

When an AI model assigns a percentage to indicate the likelihood a text is AI-generated, it does so using a deep neural network that operates like a black box, providing no visibility into how it arrived at that score.

Deep learning models are composed of complex neural networks with millions of nodes that data flows through during training and prediction. To improve accuracy, AI detectors are trained on thousands of texts from both AI systems and people, and the network learns subtle patterns that distinguish AI-written text from human writing. For example, AI-generated writing tends to have a more formulaic style compared to the unique stylistic fingerprints of human authors.

Unlike plagiarism detection where copied passages can be highlighted, an opaque AI score offers no evidence to facilitate discussion. With only a number from an inscrutable black box, instructors lack concrete details to substantiate claims of AI authorship with students. Meaningful dialogue about potential AI use is obstructed without additional insights into how scores are determined.

Reason 3. High False Negatives

False Negatives = Not catching cheaters

AI text detectors are highly unreliable at identifying AI-generated text, and failing to catch it is the most common outcome. Research suggests that as language models become more advanced, reliably identifying their output becomes extremely difficult and may not be possible at all. Moreover, minor adjustments or light rephrasing can cause existing detectors to miss AI text almost entirely. This failure to catch AI-generated text can damage a school's reputation and is unfair to students who followed the assignment guidelines and actually put in the work for their classes.

Case Study

"Vanderbilt has decided to disable Turnitin’s AI detection tool for the foreseeable future."

On August 16th, 2023, Vanderbilt University officially stopped using Turnitin's AI detector for exactly the reasons highlighted above. The university stated: "To date, Turnitin gives no detailed information as to how it determines if a piece of writing is AI-generated or not. The most they have said is that their tool looks for patterns common in AI writing, but they do not explain or define what those patterns are." It concluded that "we do not believe that AI detection software is an effective tool that should be used."

"AI detectors have been found to be more likely to label text written by non-native English speakers as AI-written" - The Guardian

What's the Solution?

Rather than focusing on the content, our approach is to analyze how a text was composed. This means looking at factors such as the time spent on an essay, its revision history and patterns, and instances of copy-pasting. This approach is harder to circumvent and, more importantly, the metrics it produces enable meaningful discussions between educators and students. The full revision trail of the writing can also serve as tangible evidence of effort. At Rumi, we use this method not only to provide an originality score but also to offer a detailed breakdown of that score for further examination and discussion.
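To give a feel for what composition-based metrics might look like, here is a minimal sketch. It is not Rumi's implementation: the `EditEvent` record, the `idle_gap` threshold, the metric names, and the sample data are all hypothetical, chosen only to illustrate the idea of measuring time spent, revisions, and copy-pasting from an edit history.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    """One entry in a hypothetical edit log (an assumed format)."""
    timestamp: float  # seconds since the document was created
    kind: str         # "type", "delete", or "paste"
    chars: int        # characters added or removed by this event


def composition_metrics(events, idle_gap=300.0):
    """Summarize how a text was composed from its edit history."""
    events = sorted(events, key=lambda e: e.timestamp)

    # Count only gaps shorter than idle_gap as active writing time,
    # so overnight breaks do not inflate the time spent.
    active_seconds = 0.0
    for prev, curr in zip(events, events[1:]):
        gap = curr.timestamp - prev.timestamp
        if gap <= idle_gap:
            active_seconds += gap

    typed = sum(e.chars for e in events if e.kind == "type")
    pasted = sum(e.chars for e in events if e.kind == "paste")
    added = typed + pasted

    return {
        "active_minutes": active_seconds / 60.0,
        "revision_events": sum(1 for e in events if e.kind == "delete"),
        "chars_deleted": sum(e.chars for e in events if e.kind == "delete"),
        "pasted_fraction": pasted / added if added else 0.0,
        "largest_paste": max(
            (e.chars for e in events if e.kind == "paste"), default=0
        ),
    }


# Made-up history: a little typing, one revision, then one large paste.
sample_events = [
    EditEvent(0, "type", 120),
    EditEvent(60, "type", 200),
    EditEvent(90, "delete", 50),
    EditEvent(4000, "paste", 1500),
    EditEvent(4030, "type", 30),
]

for name, value in composition_metrics(sample_events).items():
    print(f"{name}: {value}")
```

Unlike a single opaque score, each of these numbers points at something an educator and a student can look at together, such as a large paste late in the history or very little active writing time.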